e. Log in to Your Cluster

The pcluster ssh command is a wrapper around SSH. You can also log in to your head node directly with ssh, using whichever of its public or private IP addresses is reachable from your machine.

You can list existing clusters using the following command. This is a convenient way to find the name of a cluster in case you forget it.

pcluster list --color -r $AWS_REGION
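If you only need the status of one cluster, the listing can be filtered. The following is a minimal sketch, assuming the output has whitespace-separated columns in the order name, status, version, and that color is left off so no escape codes end up in the text. The helper name cluster_status is hypothetical.

```shell
# Print the status column for one cluster from `pcluster list`-style text on stdin.
# Assumption: columns are whitespace-separated as name, status, version.
cluster_status() {
  awk -v name="$1" '$1 == name { print $2; found = 1 } END { exit !found }'
}

# Only query AWS when the pcluster CLI is actually installed.
if command -v pcluster >/dev/null 2>&1; then
  pcluster list -r "$AWS_REGION" | cluster_status hpc-cluster-lab \
    || echo "hpc-cluster-lab not found in $AWS_REGION"
fi
```

The helper exits with a non-zero status when the name is not in the list, so it can also be used as a condition in a script.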

Now that your cluster has been created, log in to the head node using the following command in your AWS Cloud9 terminal:

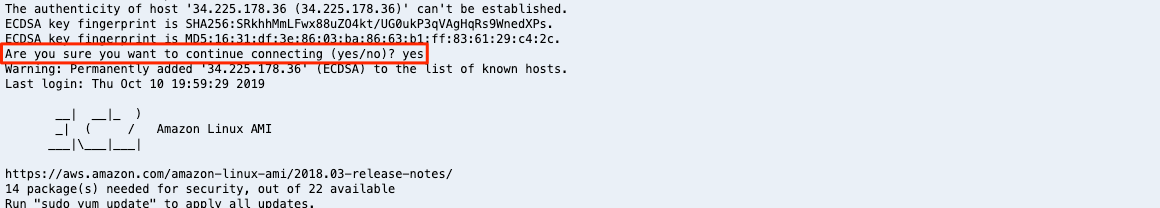

pcluster ssh hpc-cluster-lab -i ~/.ssh/$SSH_KEY_NAME -r $AWS_REGION

The first time you log in to the instance, ssh asks you to confirm that you want to connect to this host. Type yes.
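For the direct ssh route, the command has the usual key, user, and address form. The sketch below only prints the command so you can review it before running it. The helper name head_node_ssh_cmd, the key path, and the address 203.0.113.10 are placeholders, and the ec2-user login is an assumption that holds for Amazon Linux images (other images use other default users, such as ubuntu).

```shell
# Build (but do not run) a plain ssh command for the head node.
# Arguments: IP address, private key path, optional login user.
# Assumption: the default login is ec2-user, as on Amazon Linux.
head_node_ssh_cmd() {
  ip=$1
  key=$2
  user=${3:-ec2-user}
  printf 'ssh -i %s %s@%s\n' "$key" "$user" "$ip"
}

# 203.0.113.10 is a documentation-only placeholder address.
head_node_ssh_cmd 203.0.113.10 '~/.ssh/my-key'
# prints: ssh -i ~/.ssh/my-key ec2-user@203.0.113.10
```

Replace the placeholder address with the public or private IP address of your own head node.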

Getting to Know Your Cluster

Now that you are connected to the head node, familiarize yourself with the cluster structure by running the following set of commands.

SLURM

SLURM from SchedMD is one of the batch schedulers that you can use in AWS ParallelCluster. For an overview of the SLURM commands, see the SLURM Quick Start User Guide.

- List existing partitions and nodes per partition. You should see two nodes if you run this command right after creating your cluster, and zero nodes if you run it 10 minutes after creation. Ten minutes is the default cooldown period for AWS ParallelCluster, after which idle compute nodes are released, so you don’t pay for what you don’t use.

sinfo

- List queued and running jobs. There won’t be any yet, since we have not submitted anything.

squeue
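To watch the node count change as the cooldown period passes, the sinfo output can be condensed to one line per node state. This is a minimal sketch rather than part of the lab: the helper name nodes_per_state is hypothetical, while -h (no header), %T (node state), and %D (node count) are standard sinfo options.

```shell
# Sum node counts per state from "STATE COUNT" lines on stdin.
nodes_per_state() {
  awk '{ count[$1] += $2 } END { for (s in count) print s, count[s] }' | sort
}

# Only call sinfo where Slurm is installed, such as on the head node.
if command -v sinfo >/dev/null 2>&1; then
  # -h drops the header; %T is the node state, %D the number of nodes.
  sinfo -h -o '%T %D' | nodes_per_state
fi
```

Running it right after cluster creation and again after the cooldown period should show the difference described above.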

Module Environment

Lmod is a widely used tool for dynamically changing your shell environment by loading and unloading modules.

- List available modules

module av

- Load a particular module. In this case, the first command loads Intel MPI into your environment and the second checks the path of mpirun.

module load intelmpi

which mpirun
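In a script, the same check can be done with command -v, the POSIX built-in counterpart of which. The sketch below is an illustration only, and the helper name require_cmd is hypothetical.

```shell
# Print the resolved path of a command, or fail with a message on stderr.
require_cmd() {
  command -v "$1" || { echo "$1 not found on PATH" >&2; return 1; }
}

# After `module load intelmpi`, this should print the path of mpirun.
require_cmd mpirun || echo "load an MPI module first, for example: module load intelmpi"
```

Because the helper returns a non-zero status when the command is missing, a job script can stop early instead of failing later at the mpirun call.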

NFS Shares

- List the NFS exports. A few volumes are shared by the head node and are mounted on compute instances when they boot up. Both /shared and /home are accessible from all nodes.

showmount -e localhost
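To confirm that a specific directory is in the export list, the output can be checked line by line. This is a minimal sketch, assuming the usual showmount -e layout of one header line followed by one exported path per line. The helper name is_exported is hypothetical.

```shell
# Succeed if the given path appears in `showmount -e`-style text on stdin.
# The first line ("Export list for ...") is a header and is skipped.
is_exported() {
  awk -v path="$1" 'NR > 1 && $1 == path { found = 1 } END { exit !found }'
}

# Only run the check where the NFS client tools are installed.
if command -v showmount >/dev/null 2>&1; then
  for dir in /shared /home; do
    if showmount -e localhost | is_exported "$dir"; then
      echo "$dir is exported"
    else
      echo "$dir is NOT exported"
    fi
  done
fi
```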

Next, you can run your first job!